Are we on the precipice of developing autonomous killer robots: unmanned technologies that will completely dominate conflict, remorselessly and perfectly? Some advocates think so, but this article explains why the killer robot view is misplaced and how, in its misdirection, it draws attention away from the ways militaries already use artificial intelligence and machine learning technologies. The article draws upon operational constraints, a realistic view of technology, legal constraints, and even cultural aspects of the military to explain that Terminator-like devices are not the aspiration of militaries.

A lack of familiarity with military operations causes some advocates and academics to imagine fully automated offensives. The reality is that militaries carefully study adversaries and employ multiple strategies to control attacks in order to reach strategic goals, to avoid ambiguity and escalation, and to avoid civilian death. Prosaic functions that escape public attention provide the inputs for this study and control.

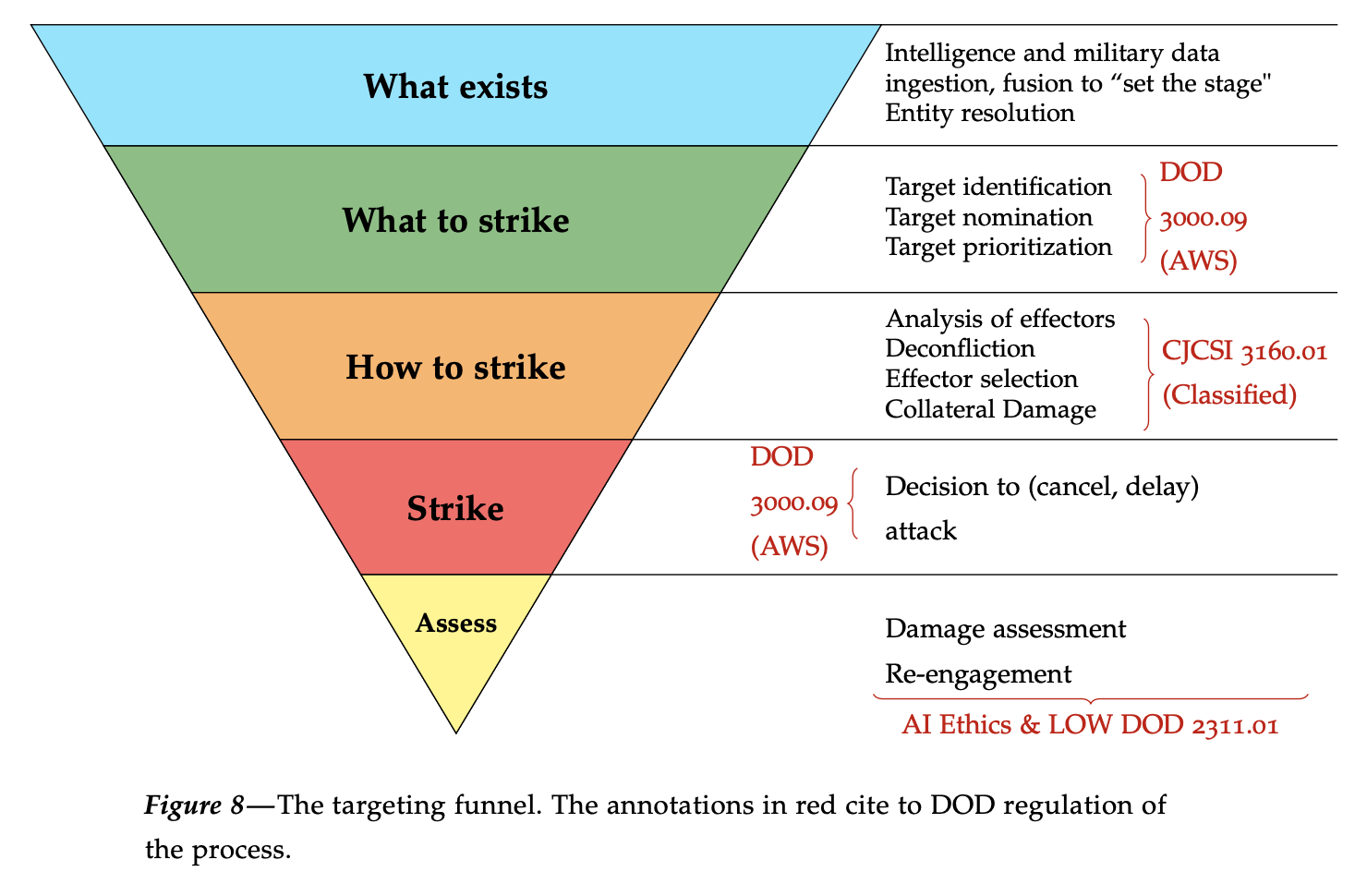

This article explains the legal, cultural, and operational limitations that constrain military procurement and decision-making, with a focus on a topic ignored in the killer robot literature: the targeting process. With a better understanding of the wants, needs, and limitations of the military, a view better aligned with how militaries actually operate points to opportunities for meliorative intervention.